Scrubbing and Enriching Data Effectively

Zeroing In on Business Problems: The Most Important Tenet

There is an exceptional surge in data generation—transforming every facet of human

existence and presenting various opportunities for organizations to extract value. Yet,

despite this abundance, a significant gap exists: many enterprises struggle to realize

the full potential of their data. This dilemma, commonly referred to as the Data Paradox,

creates a situation where organizations are surrounded by an overwhelming deluge of data

while simultaneously facing a drought of actionable insights.

What Is the Data Paradox?

The term "data paradox" refers to situations where the abundance of data leads to unexpected challenges or contradictions, often complicating decision-making processes.

Despite the assumption that more data equates to better insights, organizations frequently

encounter issues that hinder effective data utilization.

Key Aspects of the Data Paradox:

Many organizations report having more data than they can manage. According to The

Hindu, a study by Forrester Consulting for Dell Technologies found that while 71% of

respondents felt they needed more data, 82% admitted to having more data than they

could handle.

Large data sets can suffer from biases that lead to inaccurate conclusions. According to

news.harvard.edu, during the COVID-19 pandemic, surveys with vast sample sizes, such

as the Delphi-Facebook study, overestimated vaccination rates due to nonresponse bias.

• Data Silos and Fragmentation

Despite the availability of vast amounts of data, its fragmentation across different

departments or systems can impede comprehensive analysis. According to The Hindu, this

siloed data environment prevents organizations from gaining holistic insights, thereby

limiting the potential benefits of their data assets.

• Quality vs. Quantity Dilemma

The focus on accumulating large volumes of data can sometimes overshadow the

importance of data quality. According to news.harvard.edu, poor-quality data can lead to

incorrect analyses and decisions, which negates the advantages of having extensive data

sets.

• Ethical and Privacy Concerns

The proliferation of data collection raises significant ethical and privacy issues. As per

Smart Data Collective, organizations must navigate the delicate balance between

leveraging data for insights and respecting individual privacy rights to ensure compliance

with regulations and maintain public trust.

Data Management in the Retail Industry: Put into Perspective

This is where a robust framework helps: it dissects a problem logically so that each

component addresses a specific challenge. When these components are combined, they

form a comprehensive guide to problem-solving.

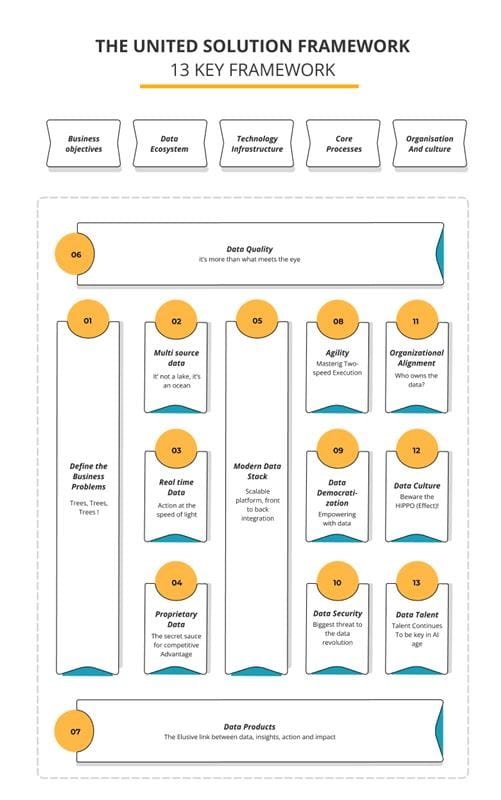

Understanding the Unified Solution Framework

What is the Unified Solution Framework?

The Unified Solution Framework emphasizes defining business problems, integrating diverse data sources, building

scalable technology infrastructures, and nurturing a data-driven culture.

When Did the Need for a Framework Arise?

The need arose with the data explosion over the last decade. Traditional methods of data management fell short of the

complexities and scale of Big Data. As a result, businesses often remain stuck in a cycle of

massive investment without gaining significant insights or valuable business outcomes.

Why is the Unified Solution Framework Essential?

The framework helps organizations overcome common pitfalls, such as data silos, poor data quality, and

misalignment, while facilitating quick responses to shifting business demands. It allows

companies to transform data into actionable insights.

How Does the Unified Solution Framework Work?

The framework begins with the definition of business objectives and the narrowing down of data requirements. It supports

organizations in the integration of multi-source data, leveraging both real-time and

proprietary data, and constructing a modern data stack.

Where Can the Unified Solution Framework Be Applied?

The framework can be applied across industries, including healthcare, finance, retail, and manufacturing. Whether an organization is

beginning its data journey or seeking to optimize existing initiatives, the framework offers a

scalable and adaptable solution.

The 13 key components of Unified Solution Framework


Fetching loads of data does not by itself support productive decision-making. Organizations can

draw incorrect conclusions and make poor judgments without a clear understanding of the

business problem they aim to solve. Therefore, it's crucial for businesses to invest time

and effort to accurately define and narrow down these problems before delving into data

exploration.

One effective method to achieve this is by constructing KPI trees. KPI trees allow

organizations to break down business challenges into manageable components to identify

the vital drivers that will affect outcomes. With these drivers in mind, businesses can

pinpoint the necessary data and build streamlined data pipelines, which reduce complexity

and enhance the manageability of Big Data.
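
The KPI tree idea described above can be sketched as a small data structure. This is only an illustration under stated assumptions: the `KpiNode` class, the retail metrics, and the data source names are hypothetical and do not come from the framework itself. The sketch shows how walking down to the leaf drivers yields the short list of data sources a pipeline would actually need.

```python
from dataclasses import dataclass, field


@dataclass
class KpiNode:
    """One node in a KPI tree: a metric plus the sub-metrics that drive it."""
    name: str
    children: list["KpiNode"] = field(default_factory=list)
    # Hypothetical names of the data sources needed to measure this metric.
    data_sources: list[str] = field(default_factory=list)


def leaf_drivers(node: KpiNode) -> list[KpiNode]:
    """Return the leaves of the tree, i.e. the lowest-level drivers."""
    if not node.children:
        return [node]
    drivers: list[KpiNode] = []
    for child in node.children:
        drivers.extend(leaf_drivers(child))
    return drivers


def required_sources(node: KpiNode) -> set[str]:
    """Collect the data sources needed to measure every leaf driver."""
    return {src for driver in leaf_drivers(node) for src in driver.data_sources}


# Illustrative retail example; every metric and source name is made up.
revenue = KpiNode("Revenue", children=[
    KpiNode("Number of orders", children=[
        KpiNode("Store visits", data_sources=["footfall_counter"]),
        KpiNode("Conversion rate",
                data_sources=["pos_transactions", "footfall_counter"]),
    ]),
    KpiNode("Average order value", data_sources=["pos_transactions"]),
])

print([d.name for d in leaf_drivers(revenue)])
print(sorted(required_sources(revenue)))
```

In this toy tree, three leaf drivers map to only two data sources, which is the kind of narrowing the KPI tree is meant to provide before any pipeline is built.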

2. Multi-source Data

In today's data-driven world, a substantial amount of valuable data lies outside of

organizations. With newer touchpoints emerging continuously, data sources are

diversifying rapidly. Relying solely on internal data is no longer a sustainable strategy,

hence organizations must incorporate external data to drive insightful decision-making.

Integrating multiple data sources—both external and internal—can significantly enhance

the depth and quality of insights derived. However, this integration also comes with

challenges due to the greater variety and complexity of data.
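
As a rough sketch of what combining internal and external data can look like, the example below enriches internal sales rows with an external feed on shared keys and keeps track of the rows that found no match. The `enrich` function, the field names, and the weather feed are assumptions made for illustration, not part of the framework.

```python
def enrich(internal, external, keys):
    """Left-join external attributes onto internal rows using the given keys.

    Returns (enriched_rows, unmatched_rows). Internal rows without an
    external match are kept unchanged and also reported as unmatched.
    """
    # Index the external rows by their key tuple for constant-time lookup.
    index = {tuple(row[k] for k in keys): row for row in external}
    enriched, unmatched = [], []
    for row in internal:
        match = index.get(tuple(row[k] for k in keys))
        if match is None:
            unmatched.append(row)
            enriched.append(dict(row))
        else:
            extra = {k: v for k, v in match.items() if k not in keys}
            enriched.append({**row, **extra})
    return enriched, unmatched


# Hypothetical internal sales data and an external weather feed.
internal_sales = [
    {"store_id": "S1", "date": "2024-06-01", "units": 120},
    {"store_id": "S2", "date": "2024-06-01", "units": 80},
]
external_weather = [
    {"store_id": "S1", "date": "2024-06-01", "rain_mm": 12.5},
]

enriched, unmatched = enrich(internal_sales, external_weather, ["store_id", "date"])
print(enriched)
print(unmatched)
```

Reporting unmatched rows separately is one simple way to surface the variety and coverage problems that come with multi-source data, rather than silently dropping records.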

3. Real-time Data

In our fast-paced digital age, the ability to respond quickly is important. Real-time data has

emerged as a game-changer as it enables organizations to react rapidly to changing

conditions. The constant inflow of real-time data is substantial but also causes

management challenges. Organizations must develop technological infrastructures

capable of supporting a high-velocity data management value chain to ensure that data

treatment is instantaneous across all management levels.

However, it's essential to recognize that not every business problem requires real-time

data. Storing and analyzing this type of data is both laborious and costly. Organizations must, therefore, identify specific use cases that call for real-time data by weighing the

4. Proprietary Data

While much data is available externally, the unique tacit knowledge held within an

organization can make a significant difference. This knowledge, derived from years of

experience and accomplishments, can be transformed into proprietary information that

provides a competitive edge in a data-centric world. Capturing and codifying this tacit

knowledge fosters the development of a “knowledge cycle,” where knowledge creators

and seekers collaborate, which can result in repeatable competitive advantages.

5. Modern Data Stack

As organizations identify their business problems and experience a variety of data inflows,

a robust foundational platform is needed. The Modern Data Stack is crucial for

organizing data initiatives. Because outdated legacy systems are often unable to process

expansive and complex datasets, a modern solution is required.

The Modern Data Stack must include integrated components hosted on the cloud, which

allows vast scalability and adaptability. Key technological shifts include moving from

on-premises infrastructure to the cloud, which enables organizations to scale on demand. In

addition, the transition from batch processing to real-time infrastructure allows for agile

responses to shifting business needs.

6. Data Quality

Quality is essential in data initiatives. High-quality data is important to ensure reliable

decision-making and actions. Unfortunately, the complexities of big data often lead to

quality issues arising from various sources and pipelines. Organizations must adopt a

context-first approach to data quality, assessing it based on the business problem, rather

than relying on traditional dimensions of measurement.
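
One way to picture a context-first approach is to attach quality rules to the business problem rather than to the dataset. The sketch below is an assumption-laden illustration: the problem names, rule names, and record fields are hypothetical. It shows the same records scoring differently depending on which problem they are meant to serve.

```python
from typing import Callable

Record = dict[str, object]
Rule = Callable[[Record], bool]

# Hypothetical rule sets, keyed by the business problem the data serves.
RULES_BY_PROBLEM: dict[str, dict[str, Rule]] = {
    "churn_prediction": {
        "has_customer_id": lambda r: r.get("customer_id") is not None,
        "has_last_purchase": lambda r: r.get("last_purchase_date") is not None,
    },
    "store_footfall_report": {
        "has_store_id": lambda r: r.get("store_id") is not None,
    },
}


def quality_report(records: list[Record], problem: str) -> dict[str, float]:
    """Share of records passing each rule that matters for this problem."""
    rules = RULES_BY_PROBLEM[problem]
    if not records:
        return {name: 0.0 for name in rules}
    return {
        name: sum(1 for r in records if rule(r)) / len(records)
        for name, rule in rules.items()
    }


records = [
    {"customer_id": 1, "store_id": "S1", "last_purchase_date": "2024-01-05"},
    {"customer_id": None, "store_id": "S1", "last_purchase_date": None},
]

print(quality_report(records, "churn_prediction"))
print(quality_report(records, "store_footfall_report"))
```

Here the same two records are fully usable for the footfall report but only half usable for churn prediction, which is the point of judging quality against the problem at hand.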

7. Data Products

To address the challenges that come with the Data Paradox, organizations must pursue the

"productization" of data. Data products are digital tools designed to deliver specific

outcomes and accelerate data management cycles by providing readily available solutions. By identifying repeatable data assets and transforming them into products,

8. Agility

Organizations face the daunting task of managing vast data quantities, often causing

initiatives to extend over lengthy timelines. To avoid becoming irrelevant, companies

need agility. A two-speed approach allows organizations to deliver quick solutions to

pressing business problems while simultaneously working on long-term capabilities.

9. Data Democratization

Data must be democratized within organizations; the paradigm of limited

access to information is outdated. By breaking down data silos and fostering self-serve

capabilities, decision-makers can access the necessary insights to make informed

decisions.

10. Data Security

As data continues to flood in, security threats are becoming more advanced and frequent.

Transitioning to a zero-trust framework, where strict identity authentication is prioritized,

can help maintain security without compromising data accessibility.
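
A minimal sketch of the zero-trust idea follows, assuming a simple HMAC-signed token and an explicit grants table. The secret, user IDs, and dataset names are hypothetical, and a real deployment would rely on an identity provider rather than this toy scheme. The point it illustrates is that every request is authenticated and checked against explicit grants, with denial as the default.

```python
import hashlib
import hmac

SECRET_KEY = b"demo-only-secret"  # hypothetical; never hard-code real secrets

# Explicit, least-privilege grants: user -> datasets they may read.
GRANTS = {"analyst-42": {"sales_summary"}}


def sign(user_id: str) -> str:
    """Issue a token by signing the user ID (stand-in for a real identity provider)."""
    return hmac.new(SECRET_KEY, user_id.encode(), hashlib.sha256).hexdigest()


def authorize(user_id: str, token: str, dataset: str) -> bool:
    """Verify identity on every request, then check explicit grants."""
    # Constant-time comparison avoids leaking information through timing.
    if not hmac.compare_digest(sign(user_id), token):
        return False
    # Deny by default: access requires an explicit grant for this dataset.
    return dataset in GRANTS.get(user_id, set())


token = sign("analyst-42")
print(authorize("analyst-42", token, "sales_summary"))
print(authorize("analyst-42", token, "payroll"))
print(authorize("analyst-42", "forged", "sales_summary"))
```

Because the check runs per request and per dataset, granting access to one dataset does not imply access to any other, which keeps data accessible without broad implicit trust.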

11. Organizational Alignment

Identifying data ownership in a collaborative environment has become crucial, which

requires organizations to establish clarity in data governance. As organizations implement

data product-centric models, they can achieve better alignment and foster collaboration

across various functions.

12. Data Culture

Organizations must work to shift away from the HiPPO (Highest Paid Person’s Opinion)

mentality and cultivate a culture that prioritizes data-driven decision-making. This involves

enhancing data literacy and normalizing data usage across all levels.

13. Data Talent

As the landscape shifts, the search for specialized data talent has become critical. The future will require not only technical skills but also problem-solving abilities and the

creativity to communicate effectively through data.

Tech Stack: Key Differences of Traditional Vs Modern Vs Data-First

Benefits of a Modern and Data-First Stack:

1. High Quality of Unified Architecture: A unified architecture streamlines the data

ecosystem by minimizing unnecessary components. High internal quality accelerates

feature delivery by reducing complexity. More tools introduce more debt, while a unified

approach enables a single management plane with a minimalistic set of modular building

blocks. These loosely coupled yet tightly integrated components support flexible data

applications, allowing users to focus on data rather than navigating fragmented systems.

root cause analysis, control over data quality, governance, and security are now achievable

through data contracts, which are agreements between producers and consumers of data

that enforce expectations without disrupting existing infrastructure.

3. Faster Time to Insights: A Data-First Stack requires a gradual start, but once the initial

phase is tackled, its value becomes clear. True to its name, it prioritizes data and metrics,

directly aligning processes with business outcomes for immediate impact.

4. Scalability: Easily adapt to growing data volumes without significant infrastructure

changes.

5. Improved Collaboration: Easier access to data for diverse teams with self-service

capabilities.
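
The data contracts mentioned in the benefits above can be sketched as a declared schema that a producer's records are checked against before consumers rely on them. This is a hedged illustration only: `FieldSpec`, `DataContract`, and the `orders` fields are hypothetical names, and real contract tooling typically covers far more (semantics, freshness, ownership).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldSpec:
    """Expectation for a single field in the contract."""
    name: str
    dtype: type
    required: bool = True


@dataclass(frozen=True)
class DataContract:
    """Agreement between a data producer and its consumers."""
    dataset: str
    fields: tuple[FieldSpec, ...]

    def violations(self, record: dict) -> list[str]:
        """List every way the record breaks the contract (empty if none)."""
        problems = []
        for spec in self.fields:
            value = record.get(spec.name)
            if value is None:
                if spec.required:
                    problems.append(f"{spec.name}: missing required field")
            elif not isinstance(value, spec.dtype):
                problems.append(
                    f"{spec.name}: expected {spec.dtype.__name__}, "
                    f"got {type(value).__name__}"
                )
        return problems


# Hypothetical contract for an orders dataset.
orders_contract = DataContract(
    dataset="orders",
    fields=(
        FieldSpec("order_id", str),
        FieldSpec("amount", float),
        FieldSpec("coupon_code", str, required=False),
    ),
)

good = {"order_id": "A-1", "amount": 19.99}
bad = {"order_id": "A-2", "amount": "19.99"}

print(orders_contract.violations(good))
print(orders_contract.violations(bad))
```

Because the check sits at the boundary between producer and consumer, it can be added without changing the systems on either side, in line with the idea of enforcing expectations without disrupting existing infrastructure.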

Data-First Architecture Diagram:


Conclusion

The Unified Solution Framework (USF) provides organizations with a structured approach to navigating the complexities

of the data landscape. By addressing both the physical and logical challenges within the

data environment, organizations can break free from the Data Paradox. By employing this

thirteen-component framework, companies can thrive in the data-first world and be well-

equipped for the evolving challenges of the AI age.

Let’s harness the power of data together at Polyxer!
