Top Tools for Big Data Optimization

In today's fast-paced business environment, business agility is imperative both for survival and for competitive advantage. The ability to adjust to rapidly changing market conditions, customer needs and emerging opportunities relies on valid, trustworthy data and practical analytics. The data warehouse, as a centralized repository of integrated data, plays an important role in business agility by supporting decision making, performance monitoring, and strategic planning.

However, traditional data warehousing approaches often struggle to keep pace with growing data velocity and volume, complex analytical demands and the need for real-time insight. To unlock business agility, organizations must optimize their data warehousing architecture, processes and technologies to be scalable, flexible and responsive.

Key statistics show how optimized data warehousing enhances business agility:

- According to ResearchGate, organizations integrating machine learning (ML) into cloud data warehouses reported up to a 40% reduction in query latency, leading to faster decision-making and better real-time analytics capabilities.

- According to ResearchGate, incorporating in-memory processing and columnar storage helped enterprises accelerate real-time analytics workloads by up to 70%, supporting agile decision-making.

- According to Allied Market Research, the global data warehousing market was valued at $21.18 billion in 2019 and is expected to reach $51.18 billion by 2028, with a CAGR of 10.7%, driven by the increasing demand for real-time analytics and agility in decision-making.

- Smart indexing strategies led to 20–30% faster data retrieval from large-scale warehouses, improving user satisfaction and data accessibility. (Source)

As we move forward, we will explore strategies, technological advancements and best practices for optimizing data warehousing, emphasizing how such optimization translates into tangible business agility: faster decision cycles, improved collaboration, and a proactive stance in a dynamic market.

The Imperative of Business Agility

Business agility refers to an organization's ability to sense changes in the market and respond quickly with strategic and operational adjustments. It is characterized by flexibility, speed and resilience. In this era of data, agility depends on technology and processes that deliver data-driven insights to decision makers quickly and accurately.

Data as the Backbone of Agility

Data equips leaders and teams with a factual basis to validate assumptions, evaluate scenarios and innovate boldly. Without optimized data warehousing, data retrieval can become a bottleneck, limit responsiveness, and leave organizations reactive rather than proactive.

Traditional Data Warehousing: Constraints on Agility

Early-generation data warehouses were designed for stable environments with well-defined requirements and batch-oriented data processing. They often include rigid schemas, long ETL processes, and complex transformations that delay data availability.

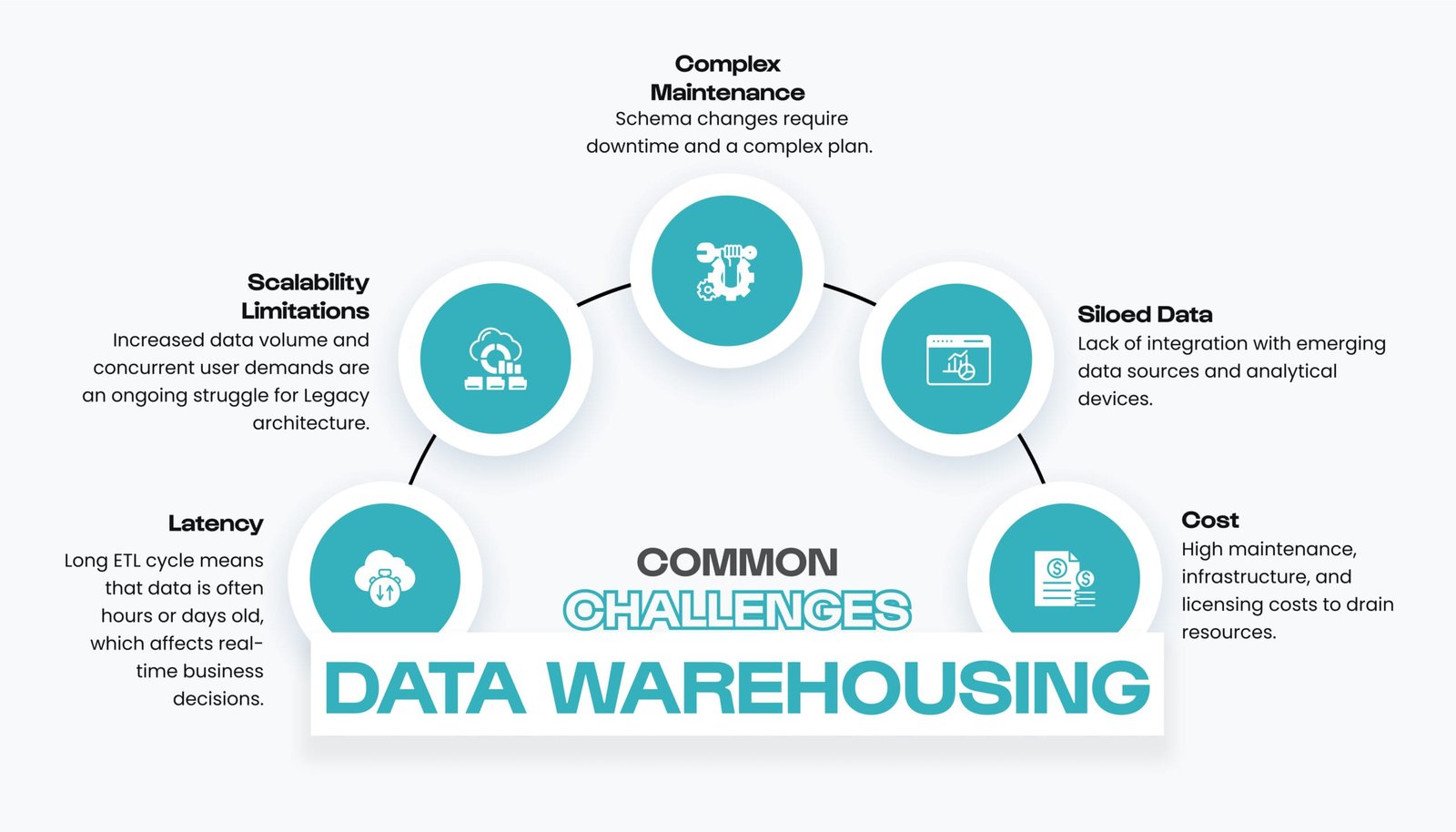

Common Challenges:

- Latency: Long ETL cycles mean that data is often hours or days old, which undermines real-time business decisions.

- Scalability Limitations: Legacy architectures struggle with growing data volumes and concurrent user demand.

- Complex Maintenance: Schema changes require downtime and careful planning.

- Siloed Data: Lack of integration with emerging data sources and analytical tools.

- Cost: High maintenance, infrastructure, and licensing costs drain resources.

These challenges limit an organization's ability to obtain the insights required for agile business responses.

Next-Generation Data Warehousing for Agility

To boost business agility, data warehousing must evolve. Major changes include moving towards cloud-based architectures, adopting flexible schemas, enabling real-time data access, and integrating advanced analytics.

1. Cloud-Native Architectures

Cloud data warehouses such as Amazon Redshift, Google BigQuery, Snowflake, and Azure Synapse provide scalable, elastic environments that adjust resources dynamically to meet demand.

Benefits:

- Rapid provisioning and scaling.

- Reduced upfront cost and operational complexity.

- Integration with cloud-native analytics and AI services.

- Global access facilitates decentralized decision making.

- Reduction in system downtime.

- Improved performance.

2. Schema Flexibility and Data Modeling

Traditional rigid schemas are being complemented or replaced by flexible approaches such as data lakes, data lakehouses and schema-on-read models.

Approaches:

- Hybrid Models: Combine the raw data storage of data lakes with warehouse performance.

- Data Vault and Dimensional Data Models: Improve adaptability and auditability.

Flexible schemas support iterative development and adapt rapidly to changing business needs.
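As a rough illustration of the schema-on-read idea, the sketch below stores raw JSON records untouched and applies a schema only when the data is read. The sample records, the `ORDER_SCHEMA` mapping and the `read_with_schema` helper are hypothetical names invented for this example, not part of any particular product.

```python
import json

# Raw events stored as-is (hypothetical sample data); nothing is enforced at write time.
RAW_EVENTS = [
    '{"order_id": 1, "amount": "19.99", "channel": "web"}',
    '{"order_id": 2, "amount": "5.00"}',
    '{"order_id": 3, "amount": "12.50", "channel": "app", "coupon": "SPRING"}',
]

# The reader declares the schema: field name -> (converter, default value).
ORDER_SCHEMA = {
    "order_id": (int, None),
    "amount": (float, 0.0),
    "channel": (str, "unknown"),
}


def read_with_schema(raw_lines, schema):
    """Parse raw JSON lines and project each record onto the reader's schema."""
    rows = []
    for line in raw_lines:
        record = json.loads(line)
        row = {}
        for field, (convert, default) in schema.items():
            value = record.get(field)
            # Missing fields fall back to the default; extra fields are ignored.
            row[field] = convert(value) if value is not None else default
        rows.append(row)
    return rows


orders = read_with_schema(RAW_EVENTS, ORDER_SCHEMA)
for order in orders:
    print(order)
```

Because the schema lives with the reader, a new field such as `coupon` can appear in the raw data without breaking existing queries, and a later reader can opt into it by extending its own schema.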

3. Real-Time and Near Real-Time Data Ingestion

Modern systems support streaming data from IoT devices, applications and third-party platforms directly into data warehouses or data lakes.

Technologies:

- Apache Kafka, Amazon Kinesis, Apache Pulsar.

- Change Data Capture (CDC) tools for syncing operational databases.

Rapid data availability enables timely insights and decisions.
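To make the Change Data Capture idea concrete, here is a minimal sketch that replays a stream of change events against an in-memory replica. The event shape (`op`, `key`, `row`) and the `apply_change_events` helper are assumptions made for illustration; real CDC tools define their own event formats.

```python
def apply_change_events(table, events):
    """Apply insert/update/delete events, in order, to a dict keyed by primary key."""
    for event in events:
        op, key = event["op"], event["key"]
        if op in ("insert", "update"):
            # Merge the changed columns into the existing row (or create the row).
            table[key] = {**table.get(key, {}), **event["row"]}
        elif op == "delete":
            table.pop(key, None)
        else:
            raise ValueError(f"unknown operation: {op}")
    return table


# Hypothetical change events captured from an operational database.
events = [
    {"op": "insert", "key": 1, "row": {"name": "Ada", "tier": "basic"}},
    {"op": "insert", "key": 2, "row": {"name": "Lin", "tier": "basic"}},
    {"op": "update", "key": 1, "row": {"tier": "premium"}},
    {"op": "delete", "key": 2},
]

replica = apply_change_events({}, events)
print(replica)
```

Only the changes travel to the warehouse, which is why CDC can keep analytical copies close to the operational source without repeated full reloads.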

4. Automated Data Pipelines and Orchestration

Automation reduces errors and speeds up data movement and transformation processes.

Tools:

- Apache Airflow

- Prefect

- AWS Glue

- Azure Data Factory.

Automated pipelines enable continuous integration of new data sources and transformations with minimal manual intervention.

5. Advanced Analytics Integration

Embedding machine learning and AI in the warehousing environment brings predictive and prescriptive capabilities directly alongside traditional BI.
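The core job of an orchestrator, running tasks in dependency order, can be sketched with Python's standard library alone. This is not how Airflow, Prefect, AWS Glue or Azure Data Factory are implemented; the task functions and the `run_pipeline` helper are hypothetical stand-ins used only to show the idea.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


# Hypothetical tasks that pass data through a shared context dictionary.
def extract(ctx):
    ctx["raw"] = [3, 1, 2]


def clean(ctx):
    ctx["clean"] = sorted(ctx["raw"])


def load(ctx):
    ctx["loaded"] = len(ctx["clean"])


TASKS = {"extract": extract, "clean": clean, "load": load}

# Each task maps to the set of tasks that must finish before it starts.
DEPENDENCIES = {"clean": {"extract"}, "load": {"clean"}}


def run_pipeline(tasks, dependencies):
    """Run every task once, in an order that respects the dependencies."""
    context, order = {}, []
    for name in TopologicalSorter(dependencies).static_order():
        tasks[name](context)
        order.append(name)
    return order, context


order, context = run_pipeline(TASKS, DEPENDENCIES)
print(order, context)
```

Production orchestrators add scheduling, retries, parallelism and monitoring on top of this ordering step, which is where most of their practical value lies.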

Examples:

- Running ML models in Snowflake, BigQuery ML, or Azure Synapse.

- Automated anomaly detection and forecasting.
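As one simple example of automated anomaly detection, the sketch below flags values that sit far from the mean, measured in standard deviations (a z-score test). The sample figures, the threshold of 2.0 and the `find_anomalies` helper are illustrative assumptions; warehouse-native ML services use their own, usually more sophisticated, models.

```python
from statistics import mean, stdev


def find_anomalies(values, threshold=2.0):
    """Return indexes of values more than `threshold` standard deviations from the mean."""
    mu = mean(values)
    sigma = stdev(values)  # sample standard deviation
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]


# Hypothetical daily order counts with one obvious spike.
daily_orders = [100, 102, 98, 101, 99, 103, 97, 250, 100, 102]

anomalies = find_anomalies(daily_orders)
print(anomalies)
```

A z-score test is easy to explain but is sensitive to the outliers it is trying to find, so the threshold generally needs tuning against the size and shape of the data.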

Industry Examples of Big Data Optimization Tools in Action

1. Netflix - Apache Spark and AWS

Netflix processes petabytes of streaming data daily to tailor content recommendations and delivery. By leveraging Apache Spark and AWS cloud resources, Netflix achieves low-latency analytics and scalable machine learning training, ensuring a seamless viewer experience.

2. LinkedIn - Apache Kafka and Samza

LinkedIn handles its immense real-time data flow using Apache Kafka for messaging and Apache Samza for stream processing. These tools support system monitoring at scale, targeted advertising, and news feed personalization, with optimized latency and fault tolerance.

3. Uber - Presto and Apache Flink

Uber uses Presto for interactive SQL analytics across vast datasets and Apache Flink for real-time streaming analytics, which improves driver matching, supports surge pricing, and helps detect fraud with optimized resource use and agility.

Best Practices for Optimizing Big Data Initiatives

- Automate and Orchestrate: Use workflow management tools to reduce manual steps and handle complex dependencies.

- Focus on Data Quality Early: Invest in validation and cleaning to prevent wasted analytics effort on poor data.

- Leverage Cloud Elasticity: Use cloud services to scale compute and storage dynamically as data grows and analytics workloads fluctuate.

- Implement Data Governance: Enhance trust with metadata management, lineage tracking and security controls.

- Continuously Monitor Performance: Use monitoring tools to identify bottlenecks, optimize resource use and meet SLAs.

- Empower Teams: Provide training and foster collaboration between data engineering, data science, and business units.
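The advice to focus on data quality early can be sketched as a small rule-based validation step that runs before data reaches analytics. The rules, field names and `validate` helper below are hypothetical examples; a real pipeline would define rules that match its own data contracts.

```python
# Hypothetical quality rules: (description, check function returning True when the row passes).
RULES = [
    ("customer_id present", lambda r: r.get("customer_id") not in (None, "")),
    (
        "amount is non-negative",
        lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0,
    ),
]


def validate(rows, rules):
    """Split rows into valid rows and (row, failed rule names) rejections."""
    valid, rejected = [], []
    for row in rows:
        failures = [name for name, check in rules if not check(row)]
        if failures:
            rejected.append((row, failures))
        else:
            valid.append(row)
    return valid, rejected


rows = [
    {"customer_id": "C1", "amount": 20.0},
    {"customer_id": "", "amount": 5.0},
    {"customer_id": "C3", "amount": -1.0},
]

valid, rejected = validate(rows, RULES)
print(len(valid), "valid;", len(rejected), "rejected")
```

Keeping the rejected rows together with the names of the failed rules makes it easier to report quality issues back to the source system instead of silently dropping data.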

The Future of Big Data Optimization

The field is evolving rapidly, with several emerging trends:

- AI-Driven Automation: Intelligent optimization of data pipelines and query plans.

- Lakehouse Architectures: Combining the flexibility of data lakes with the performance of warehouses.

- Edge Analytics: Data processing close to its source to reduce latency.

- Federated Analytics: Secure analysis of data across distributed environments without centralization.

- Quantum Computing: Promises to accelerate complex analytical tasks beyond the limits of classical computing.

The Ending Note

Optimizing big data operations is essential for organizations that aim to capitalize on their data assets efficiently and competitively. The ecosystem of big data tools is rich and diverse, with options tailored to storage, processing, orchestration and monitoring requirements.

By understanding the capabilities of these tools and aligning them with business and technical requirements, enterprises can build scalable, robust and cost-effective big data pipelines that enable real-time insight and drive transformative outcomes.

Staying abreast of innovation and following best practices will equip organizations to meet the challenges of the growing data landscape. Embracing the right optimization tools is an important step in navigating the complex journey to success.
