The Future of Data Science: Important Emerging Trends

Data is often described as the fuel of modern organizations, and getting value from it depends on well-optimized workflows. Managing and processing data effectively is crucial for gaining insights and staying competitive. As data volumes grow rapidly, businesses face challenges in managing, processing, and analyzing large amounts of information. Optimizing big data workflows is therefore a primary focus, because optimized operations deliver both reliable performance and scalability.

According to Fortune Business Insights, the global big data analytics market was valued at USD 307.51 billion in 2023 and is expected to reach USD 924.39 billion by 2032, a compound annual growth rate (CAGR) of 13.0% over the forecast period.
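For readers who want to check how the quoted growth rate relates to the two market values, here is a minimal sketch of the standard CAGR formula. The function name `cagr` and the choice of 9 compounding years (2023 to 2032) are assumptions made for illustration, not details taken from the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.13 means 13%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Values quoted above, in USD billions.
# Assumption: 9 compounding years between 2023 and 2032.
growth = cagr(307.51, 924.39, 9)
print(f"{growth:.1%}")
```

Under that assumption the result comes out at roughly 13%, in line with the figure quoted above.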

As we move ahead, we will delve into the intricacies of big data workflows, explore the challenges faced in their optimization, and understand strategies and best practices for optimizing big data workflows to ensure that teams can effectively transform raw data into actionable insights.

Understanding Big Data Workflows

Optimizing big data workflows starts with a clear understanding of what they are. A big data workflow covers every stage from gathering data through storage and processing to analysis. These workflows handle data that shows the four Vs of big data: volume, velocity, variety, and veracity. A big data workflow has six essential components:

- Data Ingestion: Collecting data from various sources such as IoT devices, databases, or external APIs.

- Data Storage: Ingested data is stored in data lakes, data warehouses, or databases.

- Data Processing: This stage turns raw data into usable form through operations such as cleaning, aggregation, and enrichment.

- Data Analysis: Analytical techniques ranging from basic statistics to advanced machine learning are applied to extract insights.

- Data Visualization: This stage presents the data in a visual format for easy interpretation and decision-making.

- Data Governance: This stage ensures data quality, security, compliance, and accessibility throughout the workflow.

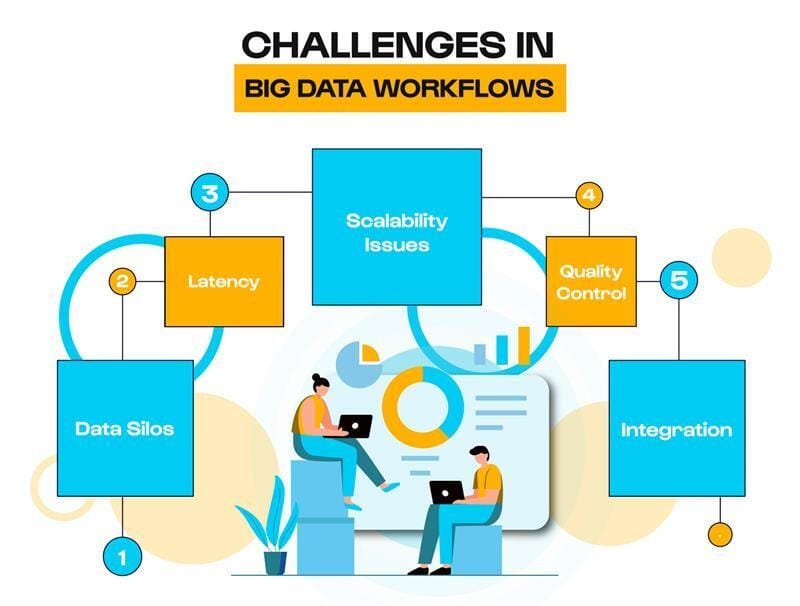

Challenges in Big Data Workflows

Big data offers significant advantages, but it also brings multiple challenges.

- Data Silos: Fragmented, isolated datasets make it hard to get a complete view of business operations.

- Scalability Issues: Scaling a workflow to handle growing data volumes is difficult to do without degrading performance.

- Latency: Slow data processing causes delays, so the information is out of date by the time it is used.

- Quality Control: Keeping data records accurate and reliable is a critical yet difficult task for organizations.

- Integration: Companies must connect multiple data platforms within a single workflow, which makes integration a challenge.

According to market analysis by MarketsandMarkets, the data science platform market was worth $95.3 billion in 2021 and is projected to reach $322.9 billion by 2026, an annual growth rate of 27.7% over the 2021-2026 period. The analysis uses 2020 as its base year and covers market evaluations from 2018 to 2026.

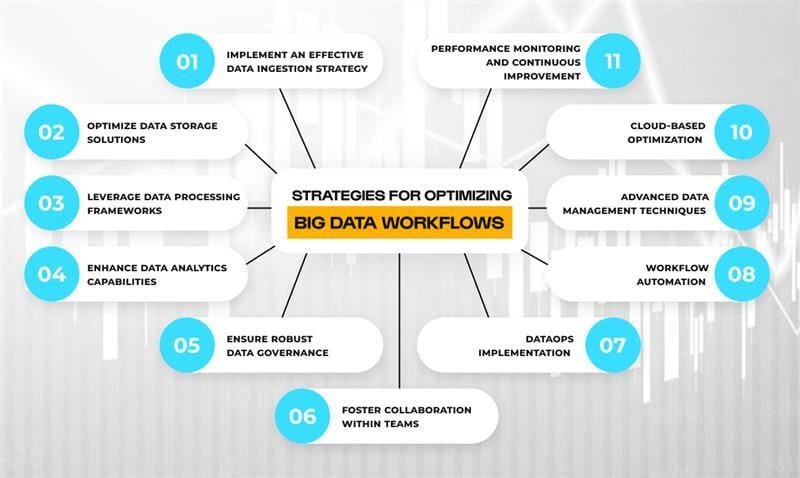

Strategies for Optimizing Big Data Workflows

1. Implement an Effective Data Ingestion Strategy

Every big data workflow depends on a reliable data ingestion approach. Organizations should:

- Utilize Batch and Stream Processing: Combine the two approaches, using batch processing for large volumes of accumulated data and stream processing for near-instant insights. Apache Kafka is a common choice for streaming, while Apache NiFi can automate data flows, including batch-oriented ones.

- Automate Data Ingestion: Automated ingestion tools reduce human error and speed up data flows so that data is ready for analysis when it is needed.

- Prioritize Data Quality: Run automated quality validation during the ingestion stage to detect quality problems early.
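To make the idea of validating data at ingestion time concrete, here is a minimal sketch in plain Python. The schema (`device_id`, `timestamp`, `reading`) and the function names are hypothetical, chosen only for illustration; a production pipeline would more likely rely on a dedicated validation tool.

```python
from datetime import datetime

# Hypothetical schema for an IoT-style record, used only for illustration.
REQUIRED_FIELDS = ("device_id", "timestamp", "reading")


def validate_record(record: dict) -> list[str]:
    """Return a list of problems; an empty list means the record passed."""
    problems = [f"missing {name}" for name in REQUIRED_FIELDS if record.get(name) is None]
    if problems:
        return problems
    try:
        datetime.fromisoformat(record["timestamp"])
    except (TypeError, ValueError):
        problems.append("timestamp is not ISO 8601")
    reading = record["reading"]
    if isinstance(reading, bool) or not isinstance(reading, (int, float)):
        problems.append("reading is not numeric")
    return problems


def ingest(records):
    """Split incoming records into accepted rows and rejected rows with reasons."""
    accepted, rejected = [], []
    for record in records:
        problems = validate_record(record)
        if problems:
            rejected.append((record, problems))
        else:
            accepted.append(record)
    return accepted, rejected


batch = [
    {"device_id": "a1", "timestamp": "2024-05-01T12:00:00", "reading": 21.5},
    {"device_id": "a2", "timestamp": "not-a-date", "reading": 19.0},
    {"device_id": None, "timestamp": "2024-05-01T12:01:00", "reading": 20.1},
]
accepted, rejected = ingest(batch)
print(len(accepted), "accepted;", len(rejected), "rejected")
```

Keeping the rejected rows together with their reasons, rather than silently dropping them, makes it easier to trace quality problems back to the source system.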

2. Optimize Data Storage Solutions

The performance of a big data workflow depends heavily on selecting the appropriate storage solution.

- Adopt Data Lakes for Scalability: Use data lakes as a scalable, lower-cost option for storing massive structured and unstructured datasets.

- Use Data Warehousing for Analytics: Data warehouses such as Amazon Redshift and Google BigQuery organize data so that users can run analytical queries on it quickly.

- Implement Data Partitioning: Partitioning data by key improves query response times, because a query only has to read the relevant data segments.
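The effect of partitioning by key can be sketched in a few lines of Python. This is a toy in-memory illustration, not how a data lake or warehouse implements partitioning; the helper names `partition_by_key` and `query` and the sample rows are hypothetical.

```python
from collections import defaultdict


def partition_by_key(rows, key):
    """Group rows into partitions keyed by the value of `key`."""
    partitions = defaultdict(list)
    for row in rows:
        partitions[row[key]].append(row)
    return partitions


def query(partitions, key_value, predicate):
    """Scan only the partition that matches key_value (partition pruning)."""
    return [row for row in partitions.get(key_value, []) if predicate(row)]


events = [
    {"day": "2024-05-01", "store": 1, "sales": 120},
    {"day": "2024-05-01", "store": 2, "sales": 80},
    {"day": "2024-05-02", "store": 1, "sales": 95},
]
by_day = partition_by_key(events, "day")

# Only the rows for 2024-05-01 are examined; other days are never read.
big_sales = query(by_day, "2024-05-01", lambda row: row["sales"] > 100)
print(big_sales)
```

The same principle applies at scale: when queries usually filter on a column such as a date, partitioning on that column lets the engine skip most of the stored data.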

3. Leverage Data Processing Frameworks

Timely insights depend on efficient data processing.

- Use Distributed Computing: Frameworks such as Apache Spark or Hadoop speed up big data processing by distributing the work across multiple nodes.

- Automate Workflows: Orchestrators such as Apache Airflow and Prefect automate workflow execution while providing scheduling and status monitoring.

- Optimize Job Configurations: Tune the resources allocated to each job so that they match the particular requirements of your workflows.
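The core idea behind orchestrators, running each task only after the tasks it depends on, can be sketched with Python's standard library. This is not the Airflow or Prefect API; `run_pipeline` and the three task names are hypothetical, and the sketch leaves out scheduling, retries, and monitoring.

```python
from graphlib import TopologicalSorter


def run_pipeline(tasks, dependencies):
    """Run each task after all of its upstream tasks; return the execution order."""
    order = []
    results = {}
    # static_order() yields every node only after all of its predecessors.
    for name in TopologicalSorter(dependencies).static_order():
        upstream = {dep: results[dep] for dep in dependencies.get(name, ())}
        results[name] = tasks[name](upstream)
        order.append(name)
    return order, results


# Each task receives a dict of its upstream results.
tasks = {
    "extract": lambda upstream: [3, 1, 2],
    "clean": lambda upstream: sorted(upstream["extract"]),
    "aggregate": lambda upstream: sum(upstream["clean"]),
}
# Maps each task to the set of tasks it depends on.
dependencies = {"clean": {"extract"}, "aggregate": {"clean"}}

order, results = run_pipeline(tasks, dependencies)
print(order, results["aggregate"])
```

Declaring dependencies as data rather than hard-coding the call order is what lets an orchestrator decide what can run in parallel and what must be rerun after a failure.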

4. Enhance Data Analytics Capabilities

Organizations must use effective analytics techniques to generate value from their data.

- Utilize Advanced Analytics Tools: Adopt tools such as R, Python, or Tableau, together with suitable data visualization systems, to analyze data thoroughly.

- Employ Machine Learning: Machine learning algorithms help identify patterns in data, automate predictive modeling, and support better decision-making.

- Focus on User-Centric Dashboards: User-oriented dashboards present vital information in visually straightforward displays that match what users need.

5. Ensure Robust Data Governance

Organizations need a data management framework that both preserves data quality and ensures regulatory compliance.

- Establish Clear Data Policies: Create specific data policies that define how staff access information, what they may share, and how data is protected.

- Implement Metadata Management: Metadata management helps users trace data back to its original sources, understand its meaning, and find it more easily.

- Regularly Monitor and Audit Data Workflows: Regular monitoring lets teams detect workflow problems early and verify compliance with standards.

6. Foster Collaboration within Teams

Cooperation among stakeholders and teams leads to more efficient and innovative big data processing.

- Encourage Cross-Disciplinary Teams: Have data scientists work alongside business analysts and IT professionals in cross-functional teams, so that data projects draw on diverse expertise.

- Conduct Regular Training: Provide regular training sessions that build team skills in modern data science tools, new technologies, and new methods.

- Cultivate a Data-Driven Culture: Make data the main basis for organizational decisions by building a data-driven approach into operational processes.

7. DataOps Implementation

DataOps combines data engineering, data integration, data quality, agile software development, and DevOps practices, with the aim of improving the speed and quality of data analytics. Fostering collaboration between data engineers and data scientists leads to better automation and more efficient data workflows. Essential concepts are:

- Continuous Integration and Deployment: Regularly incorporating new technologies and insights into established data processes.

- Automated Testing: Safeguarding data integrity through automated validation checks.

- Monitoring and Feedback Loops: Constantly evaluating workflow efficiency to find possible improvements.

8. Workflow Automation

Automation uses technology to reduce human intervention in specific activities. Automating repetitive tasks reduces the need for user involvement, limits errors, and shortens processing time. For efficient workflow automation, follow these steps:

- Mapping Existing Workflows: Identify the processes already in place so that you can see which of them can be automated.

- Starting Small: Begin by automating small, simple tasks to create a foundation on which more intricate automation can be built later.

- Prioritizing User-Friendly Tools: Favor tools that integrate smoothly with current systems and are easy to work with.

9. Advanced Data Management Techniques

Advanced data management techniques can greatly improve the performance of data workflows:

- In-Memory Data Management: Keep frequently used data in memory, for example with distributed in-memory databases, to cut access times.

- Efficient Data Partitioning: Break very large datasets into smaller pieces that are easier to handle.

- Optimized Query Execution: Refine database queries to reduce the time they take and the cost they incur.
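As a small illustration of query optimization, the sketch below uses Python's built-in `sqlite3` module with an in-memory database to show how adding an index changes the plan the engine chooses. The table, column, and index names are hypothetical, and the exact wording of the plan varies between SQLite versions.

```python
import sqlite3

# An in-memory SQLite database stands in for a real analytical store.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (store_id INTEGER, day TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [(i % 50, f"2024-05-{i % 28 + 1:02d}", float(i)) for i in range(1000)],
)


def plan(sql):
    """Return SQLite's query plan for `sql` as a single string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql)
    return " | ".join(row[3] for row in rows)  # column 3 holds the plan detail


sql = "SELECT SUM(amount) FROM sales WHERE store_id = 7"

plan_before = plan(sql)  # without an index: a full table scan
conn.execute("CREATE INDEX idx_sales_store ON sales (store_id)")
plan_after = plan(sql)   # with the index: a targeted search

print("before:", plan_before)
print("after: ", plan_after)
```

Inspecting the query plan before and after a change is a cheap way to confirm that an optimization actually took effect, rather than relying on timing alone.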

10. Cloud-Based Optimization

Cloud platforms are a favorable choice because they provide flexibility and scalability for big data workflows. Good practices include:

- Selecting Appropriate Cloud Services: Select cloud services that align with organizational needs and workload types.

- Resource Configuration: Configure cloud instances to balance cost and performance as workloads scale.

- Data Localization: Store and process data in regions close to where it is used, both to meet compliance requirements and to avoid latency issues.

11. Performance Monitoring and Continuous Improvement

Routinely checking workflow performance helps identify inefficiencies and opportunities for improvement. This approach includes:

- Defining Key Performance Indicators (KPIs): Define metrics that capture workflow performance, such as completion time, resource efficiency, and cost.

- Continuous Monitoring: Use monitoring tools to keep users updated on the workflow in real time.

- Iterative Optimization: Use performance data to make corrections to the workflows.
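One simple way to start collecting a completion-time KPI is to time each workflow step and summarize the results. The sketch below uses only the standard library; the `timed` helper, the `summarize` function, and the step names are hypothetical, and a real deployment would normally send these numbers to a monitoring system instead of keeping them in memory.

```python
import time
from collections import defaultdict
from contextlib import contextmanager

# Maps a step name to the list of its observed durations in seconds.
step_durations = defaultdict(list)


@contextmanager
def timed(step):
    """Record how long the enclosed block takes under the given step name."""
    start = time.perf_counter()
    try:
        yield
    finally:
        step_durations[step].append(time.perf_counter() - start)


def summarize():
    """Return run count, mean, and worst-case duration per step."""
    return {
        step: {
            "runs": len(durations),
            "mean_s": sum(durations) / len(durations),
            "max_s": max(durations),
        }
        for step, durations in step_durations.items()
    }


# Stand-in workloads; real steps would call the actual pipeline code.
for _ in range(3):
    with timed("clean"):
        sorted(range(10_000), reverse=True)
with timed("aggregate"):
    sum(range(10_000))

report = summarize()
for step, stats in report.items():
    print(step, stats)
```

Comparing these summaries from one run to the next gives a baseline against which each optimization can be judged.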

Real Life Case Studies: Successful Workflow Optimization

Big data workflows have changed several sectors by making it possible to process and analyze enormous datasets to drive informed decision-making. The following two real-life case studies highlight how big data workflows are applied:

1. Walmart's Demand Forecasting with Dask

Walmart, one of the largest retailers in the world, manages a vast inventory across many sites, so accurate demand forecasting is required to guarantee product availability and operational efficiency. To meet this challenge, Walmart used Dask, a parallel computing library for Python, to strengthen its demand forecasting.

Implementation Details:

- Data Volume: Walmart's data processing handles roughly 500 million store-item combinations, which generates large quantities of information that must be managed effectively.

- Technological Stack: By combining Dask with RAPIDS and XGBoost, Walmart reportedly achieved a substantial speedup in its data processing operations, by as much as 100x.

Benefits Achieved:

- Enhanced Forecasting Accuracy: The Dask-based pipeline allows more specific forecasts of product demand, reducing overstocking and understocking incidents.

- Operational Efficiency: Faster data processing supported timely decision-making, which helped improve stock management and supply chain operations.

Using big data workflows with tools like Dask can help retail businesses improve operational results.

2. Genomic Data Analysis with Nextflow

The complexity and volume of genomic data call for scalable and repeatable analysis procedures. To address this, many organizations have embraced Nextflow, a workflow management system.

Implementation Details:

- Adoption by Research Institutions: Organizations such as the Centre for Genomic Regulation and the Francis Crick Institute use Nextflow as their preferred system for processing sequencing data.

- Community Collaboration: Nextflow's community project nf-core focuses on sharing and curating bioinformatics pipelines to support reproducibility and portability across different computing environments.

Benefits Achieved:

- Reproducibility: Nextflow's approach allows pipelines to be run consistently across many platforms, which boosts the reliability of genomic analyses.

- Scalability: The system handles large data processing jobs effectively, accommodating the large datasets usually found in genomic research.

This case study shows the important contribution of big data workflows to advancing scientific research, particularly in handling and analyzing complex biological data.

Together, these case studies show how big data workflows can change industries ranging from retail to scientific research through practical data processing and insightful analysis.

Conclusion

Optimizing big data workflows is a multilayered task that demands a systematic approach, powerful tools, and a cooperative culture. Following the approaches above will help businesses improve their workflows, stimulate innovation, and stay competitive in a quickly changing data landscape, provided they keep up with new developments and continuously improve their processes.
